@liuqiufeng

Thanks a lot, re-pulling 1.5 fixed it.

Also, the application.example.yml that ships with the 1.5 container has a problem. Startup fails with the following error:

Could not resolve placeholder 'console.user.username' in value "${console.user.username}"

Adding the following to the config fixes it (why does console need to be configured when it isn't used here?):

console:
  user:
    username: admin
    password: admin

Then startup fails again, with this error:

Could not resolve placeholder 'seata.security.secretKey' in value "${seata.security.secretKey}"

Adding the following to the config (under seata) fixes it:

security:
  secretKey: SeataSecretKey0c382ef121d778043159209298fd40bf3850a017
  tokenValidityInMilliseconds: 1800000
  ignore:
    urls: /,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-fe/public/**,/api/v1/auth/login
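As a quick way to catch both errors before starting the container, here is a minimal Python sketch. It assumes the YAML has already been loaded into a nested dict (the `shipped` dict below is a hand-written stand-in, not the real file), and `REQUIRED`, `flatten`, and `missing_keys` are names made up for illustration. It only looks at the file content; Spring can also resolve these placeholders from environment variables or command-line arguments, which this check ignores.

```python
# Keys taken from the two "Could not resolve placeholder" errors above.
REQUIRED = ["console.user.username", "seata.security.secretKey"]


def flatten(node, prefix=""):
    """Flatten nested dicts into dotted keys, e.g. {'a': {'b': 1}} -> {'a.b': 1}."""
    flat = {}
    for key, value in node.items():
        dotted = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten(value, dotted))
        else:
            flat[dotted] = value
    return flat


def missing_keys(config, required):
    """Return the required dotted keys that the config does not define."""
    flat = flatten(config)
    return [key for key in required if key not in flat]


# Hypothetical stand-in for a config without the two fixes.
shipped = {"server": {"port": 7091}, "seata": {"config": {"type": "nacos"}}}

# The same stand-in with both fixes applied.
fixed = {
    "server": {"port": 7091},
    "console": {"user": {"username": "admin", "password": "admin"}},
    "seata": {
        "config": {"type": "nacos"},
        "security": {"secretKey": "SeataSecretKey0c382ef121d778043159209298fd40bf3850a017"},
    },
}

print(missing_keys(shipped, REQUIRED))  # ['console.user.username', 'seata.security.secretKey']
print(missing_keys(fixed, REQUIRED))    # []
```

The check compares key names exactly as written, so it does not model Spring's relaxed binding.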

My final config file is as follows:

server:

port: 7091

spring:

application:

name: seata-server

logging:

config: classpath:logback-spring.xml

file:

path: ${user.home}/logs/seata

extend:

logstash-appender:

destination: 127.0.0.1:4560

kafka-appender:

bootstrap-servers: 127.0.0.1:9092

topic: logback_to_logstash

console:

user:

username: admin

password: admin

seata:

config:

# support: nacos 、 consul 、 apollo 、 zk 、 etcd3

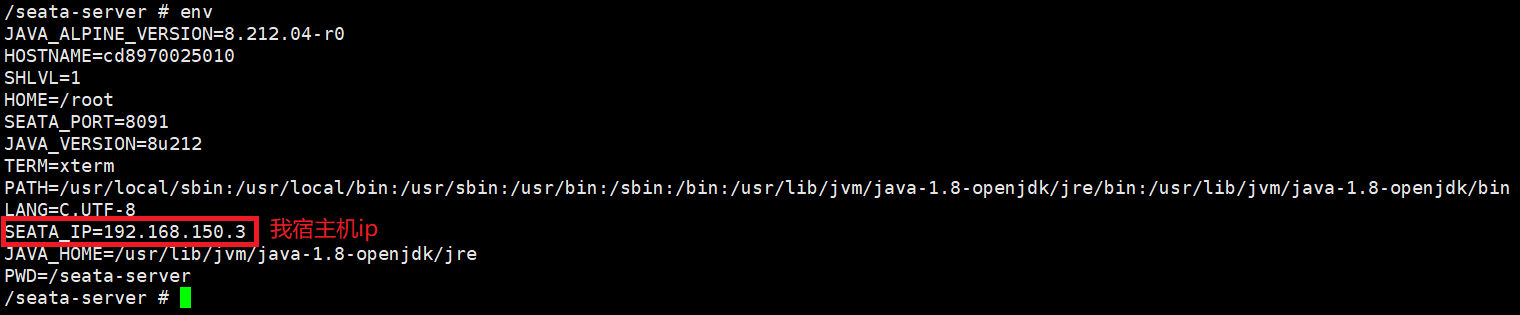

type: nacos

nacos:

server-addr: 192.168.150.3:8848

namespace:

group: SEATA_GROUP

username: nacos

password: nacos

##if use MSE Nacos with auth, mutex with username/password attribute

#access-key: ""

#secret-key: ""

data-id: seataServer.properties

consul:

server-addr: 127.0.0.1:8500

acl-token:

key: seata.properties

apollo:

appId: seata-server

apollo-meta: http://192.168.1.204:8801

apollo-config-service: http://192.168.1.204:8080

namespace: application

apollo-access-key-secret:

cluster: seata

zk:

server-addr: 127.0.0.1:2181

session-timeout: 6000

connect-timeout: 2000

username:

password:

node-path: /seata/seata.properties

etcd3:

server-addr: http://localhost:2379

key: seata.properties

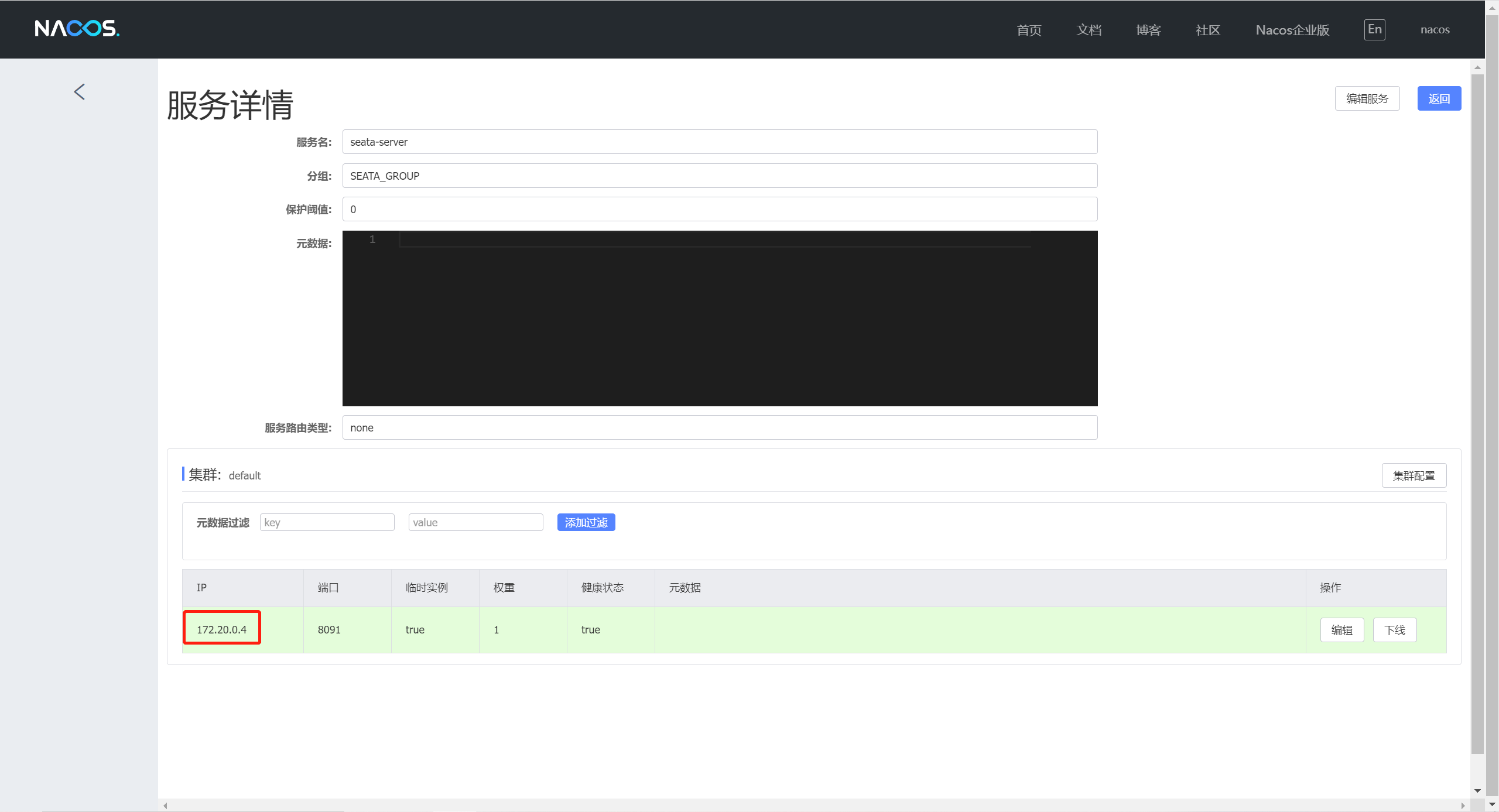

registry:

# support: nacos 、 eureka 、 redis 、 zk 、 consul 、 etcd3 、 sofa

type: nacos

preferred-networks: 30.240.*

nacos:

application: seata-server

server-addr: 192.168.150.3:8848

group: SEATA_GROUP

namespace:

cluster: default

username: nacos

password: nacos

##if use MSE Nacos with auth, mutex with username/password attribute

#access-key: ""

#secret-key: ""

eureka:

service-url: http://localhost:8761/eureka

application: default

weight: 1

redis:

server-addr: localhost:6379

db: 0

password:

cluster: default

timeout: 0

zk:

cluster: default

server-addr: 127.0.0.1:2181

session-timeout: 6000

connect-timeout: 2000

username: ""

password: ""

consul:

cluster: default

server-addr: 127.0.0.1:8500

acl-token:

etcd3:

cluster: default

server-addr: http://localhost:2379

sofa:

server-addr: 127.0.0.1:9603

application: default

region: DEFAULT_ZONE

datacenter: DefaultDataCenter

cluster: default

group: SEATA_GROUP

address-wait-time: 3000

server:

service-port: 8091 #If not configured, the default is '${server.port} + 1000'

max-commit-retry-timeout: -1

max-rollback-retry-timeout: -1

rollback-retry-timeout-unlock-enable: false

enableCheckAuth: true

retryDeadThreshold: 130000

xaerNotaRetryTimeout: 60000

recovery:

handle-all-session-period: 1000

undo:

log-save-days: 7

log-delete-period: 86400000

session:

branch-async-queue-size: 5000 #branch async remove queue size

enable-branch-async-remove: false #enable to asynchronous remove branchSession

store:

# support: file 、 db 、 redis

mode: db

session:

mode: db

lock:

mode: db

file:

dir: sessionStore

max-branch-session-size: 16384

max-global-session-size: 512

file-write-buffer-cache-size: 16384

session-reload-read-size: 100

flush-disk-mode: async

db:

datasource: druid

db-type: mysql

driver-class-name: com.mysql.jdbc.Driver

url: jdbc:mysql://192.168.150.3:3306/seata?rewriteBatchedStatements=true

user: root

password: 123456

min-conn: 5

max-conn: 100

global-table: global_table

branch-table: branch_table

lock-table: lock_table

distributed-lock-table: distributed_lock

query-limit: 100

max-wait: 5000

redis:

mode: single

database: 0

min-conn: 1

max-conn: 10

password:

max-total: 100

query-limit: 100

single:

host: 127.0.0.1

port: 6379

sentinel:

master-name:

sentinel-hosts:

metrics:

enabled: false

registry-type: compact

exporter-list: prometheus

exporter-prometheus-port: 9898

transport:

rpc-tc-request-timeout: 30000

enable-tc-server-batch-send-response: false

shutdown:

wait: 3

thread-factory:

boss-thread-prefix: NettyBoss

worker-thread-prefix: NettyServerNIOWorker

boss-thread-size: 1

security:

secretKey: SeataSecretKey0c382ef121d778043159209298fd40bf3850a017

tokenValidityInMilliseconds: 1800000

ignore:

urls: /,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-fe/public/**,/api/v1/auth/login
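One small note on the ports in this file: the inline comment on service-port says the default is '${server.port} + 1000' when it is not configured. A minimal Python sketch of that rule, just to show the arithmetic (the function name `effective_service_port` is made up for illustration and is not part of Seata):

```python
def effective_service_port(server_port, service_port=None):
    """Return the configured service port, or server_port + 1000 when unset."""
    if service_port is not None:
        return service_port
    return server_port + 1000


# With server.port 7091 and no explicit service-port, the rule gives 8091,
# which is the same value this config sets explicitly.
print(effective_service_port(7091))        # 8091
print(effective_service_port(7091, 8091))  # 8091
```

So with server.port set to 7091, the explicit service-port: 8091 line matches what the default would be anyway.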